The advent of ChatGPT at the end of 2022 left everyone in awe of the capabilities of natural language processing chatbots (NLP). They can magically transform brief text prompts into coherent, human-like text, including papers, language translations, and code examples. Technology companies are also deeply attracted by the potential of ChatGPT and have started to explore how to apply this innovative technology to their own products and customer experiences.

However, compared with previous AI models, GenAI, due to its higher computational complexity and power consumption requirements, brings a significant increase in "cost". So, is the GenAI algorithm suitable for edge device applications where power consumption, performance, and cost are all crucial? The answer is yes, but not without challenges.

GenAI, with great potential in the edge end

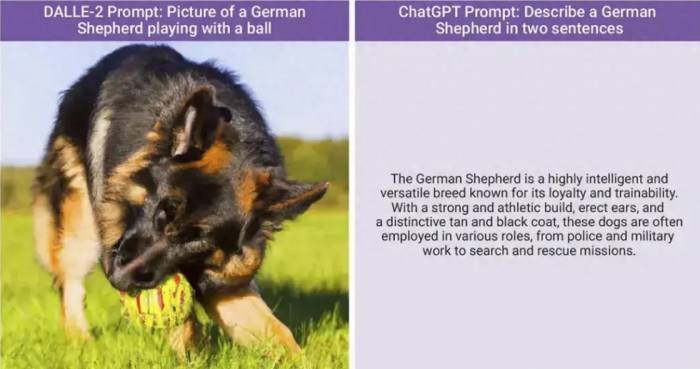

GenAI, that is, Generative AI, is a class of machine learning algorithms that can generate various content (including human-like text and images). Early machine learning algorithms mainly focused on identifying patterns in images, speech, or text and making predictions based on data. GenAI algorithms go a step further, they can perceive and learn patterns, and generate new patterns on demand by simulating the original data set. For example, early algorithms can predict the probability of a cat in a certain image, while GenAI can generate images of cats or detailed descriptions of the characteristics of cats.

Advertisement

ChatGPT may be the most famous GenAI algorithm at present, but it is not the only one. There are many GenAI algorithms available for use now, and new algorithms are constantly emerging. GenAI algorithms are mainly divided into two categories: text-to-text generators (also known as chatbots, such as ChatGPT, GPT-4, and Llama2) and text-to-image generation models (such as DALLE-2, Stable Diffusion, and Midjourney). Figure 1 shows examples of these two models. Because the output types of the two models are different (one is based on text, the other is based on images), their requirements for edge device resources also differ.

Traditional GenAI application scenarios often require an Internet connection and access to large server clusters for complex calculations. However, this is not a feasible solution for edge device applications. Edge devices need to deploy data sets and neural processing engines locally to meet key needs such as low latency, high privacy, security, and limited network connections.

Deploying GenAI on edge devices has great potential and can bring new opportunities and changes to fields such as automobiles, cameras, smartphones, smartwatches, virtual reality/augmented reality (VR/AR), and the Internet of Things (IoT).

For example, deploying GenAI in cars, since vehicles are not always covered by wireless signals, GenAI needs to run using the resources available at the edge. The application of GenAI includes:

Improving road rescue and converting operation manuals into AI-enhanced interactive guides.Virtual Voice Assistant: A voice assistant based on GenAI can understand natural language commands, helping drivers complete navigation, play music, send messages, and other operations while ensuring driving safety.

Personalized Cockpit: Customize the in-car atmosphere lighting, music playback, and other experiences according to the driver's preferences and needs.

Other edge applications may also benefit from GenAI. By generating images locally and reducing reliance on cloud processing, AR edge devices can be optimized. Additionally, voice assistants and interactive Q&A systems can also be applied to many edge devices.

However, the application of GenAI on edge devices is still in its infancy. To achieve large-scale deployment, it is necessary to overcome the bottlenecks of computational complexity and model size, and address the issues of power consumption, area, and performance limitations of edge devices.

The challenge is here, how to deploy GenAI to the edge side?

To understand GenAI and deploy it to the edge side, we first need to understand its architecture and operation.

The core of GenAI's rapid development is transformers, a new type of neural network architecture proposed by the Google Brain team in a 2017 paper. Compared with traditional recurrent neural networks (RNN) and convolutional neural networks (CNN) used for images, videos, or other two-dimensional or three-dimensional data, transformers have shown stronger advantages in processing data such as natural language, images, and videos.

The reason why transformers are so excellent lies in their unique attention mechanism. Unlike traditional AI models, transformers pay more attention to key parts of the input data, such as specific words in text or specific pixels in images. This ability allows transformers to understand context more accurately, thereby generating more realistic and accurate content. Compared with RNNs, transformers can better learn the relationships between words in text strings, and compared with CNNs, they can better learn and express complex relationships in images.

Thanks to the pre-training of massive data, GenAI models have shown strong capabilities, enabling them to better recognize and interpret human language or other types of complex data. The larger the dataset, the better the model can handle human language.

Compared with CNN or visual transformer machine learning models, the parameters of GenAI algorithms (pre-trained weights or coefficients used in neural networks to recognize patterns and create new patterns) are several orders of magnitude larger. As shown in Figure 2, the common CNN algorithm ResNet50 used for benchmark testing has 25 million parameters, while some GenAI models (such as BERT and Vision Transformer (ViT)) have parameters in the hundreds of millions.However, there are exceptions. Mobile ViT is an optimized GenAI model whose number of parameters can compete with the CNN model MobileNet, which means it can be used on edge devices with limited computing resources.

It can be seen that although GenAI models are powerful, they also require a large number of parameters to support them. Given the limited memory of edge devices, how can embedded neural processing units (NPUs) handle the processing of such a large number of parameters?

The answer is that they cannot.

To solve this problem, researchers are actively exploring parameter compression techniques to reduce the number of parameters in GenAI models. For example, Llama-2 offers a model version with 70 billion parameters, and even a smaller model with 7 billion parameters. Although Llama-2 with 7 billion parameters is still large, it is already within the range that embedded NPUs can achieve. MLCommons has added GPT-J (a GenAI model with 6 billion parameters) to its MLPerf Edge AI benchmark list.

Choosing the fastest memory interface is important.

Behind the powerful capabilities of GenAI algorithms lies a huge demand for computing resources and memory bandwidth. How to balance the relationship between the two is the key factor in determining the GenAI architecture.

For example, Vinson diagrams often require more computing power and higher bandwidth support, because processing two-dimensional images requires a lot of computing, but the number of parameters is not much different (usually in the range of hundreds of millions). The situation of large language models is more unbalanced, they require less computing resources, but need a large amount of data transfer. Even smaller language models (such as models with 600-700 million parameters) are affected by memory limitations.

An effective way to solve these problems is to choose a faster memory interface. It can be seen from Figure 3 that the LPDDR5 memory interface commonly used by edge devices has a bandwidth of 51 Gbps, while HBM2E can support a bandwidth of up to 461 Gbps. Using an LPDDR memory interface will automatically limit the maximum data bandwidth, which means that compared with the NPUs or GPUs used in server applications, the bandwidth given to GenAI algorithms by edge applications will automatically decrease. We can solve this problem by increasing the amount of on-chip L2 memory.Implementing GenAI on the ARC® NPX6 NPU IP

To achieve an efficient NPU design for GenAI and other Transformer-based models, complex multi-level memory management is required.

Synopsys' ARC® NPX6 processor features a flexible memory architecture that supports scalable L2 memory, with up to 64MB of on-chip SRAM. Additionally, each NPX6 core is equipped with an independent DMA, specifically designed for executing tasks such as fetching feature maps and coefficients, as well as writing new feature maps. This task differentiation enables an efficient pipeline data flow, minimizing bottlenecks and maximizing processing throughput. The series also incorporates a range of bandwidth-saving techniques in both hardware and software to maximize bandwidth utilization.

The Synopsys ARC® NPX6 NPU IP series is based on the sixth-generation neural network architecture, designed to support a variety of machine learning models, including CNNs and transformers. The NPX6 series can be scaled with a configurable number of cores, each with its own independent matrix multiplication engine, general tensor accelerator (GTA), and dedicated direct memory access (DMA) unit for streamlined data processing. NPX6 can leverage the same development tools to scale applications from those requiring less than 1 TOPS to those requiring thousands of TOPS, maximizing software reuse.

The matrix multiplication engine, GTA, and DMA are all optimized to support transformers, enabling the ARC® NPX6 to support GenAI algorithms. The GTA in each core is explicitly designed and optimized for efficient execution of non-linear functions, such as ReLU, GELU, and Sigmoid. These functions are implemented using a flexible lookup table approach, anticipating future non-linear functions. The GTA also supports other key operations, including SoftMax and L2 normalization required by transformers. In addition, the matrix multiplication engine within each core can perform 4,096 multiplications per cycle. As GenAI is based on transformers, there are no computational limitations when running GenAI on the NPX6 processor.

In embedded GenAI applications, the ARC NPX6 series will be limited only by the available LPDDR in the system. The NPX6 can successfully run GenAI algorithms such as Stable Diffusion (text-to-image) and Llama-2 7B (text-to-text), with efficiency depending on system bandwidth and the use of on-chip SRAM. While larger GenAI models can also run on the NPX6, they will be slower than when implemented on servers (measured by tokens per second).

Conclusion

As industry professionals continue to explore new algorithms and optimization techniques, and with the support of IP vendors, GenAI will revolutionize the way we interact with devices in the future, bringing us a more intelligent, personalized, and better future.

Comments